Introduction

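Project repository: https://github.com/google-gemini/gemini-fullstack-langgraph-quickstart

Project overview: a ReAct-based agent demo built by the Gemini team, with React as the frontend library and LangGraph as the backend library.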

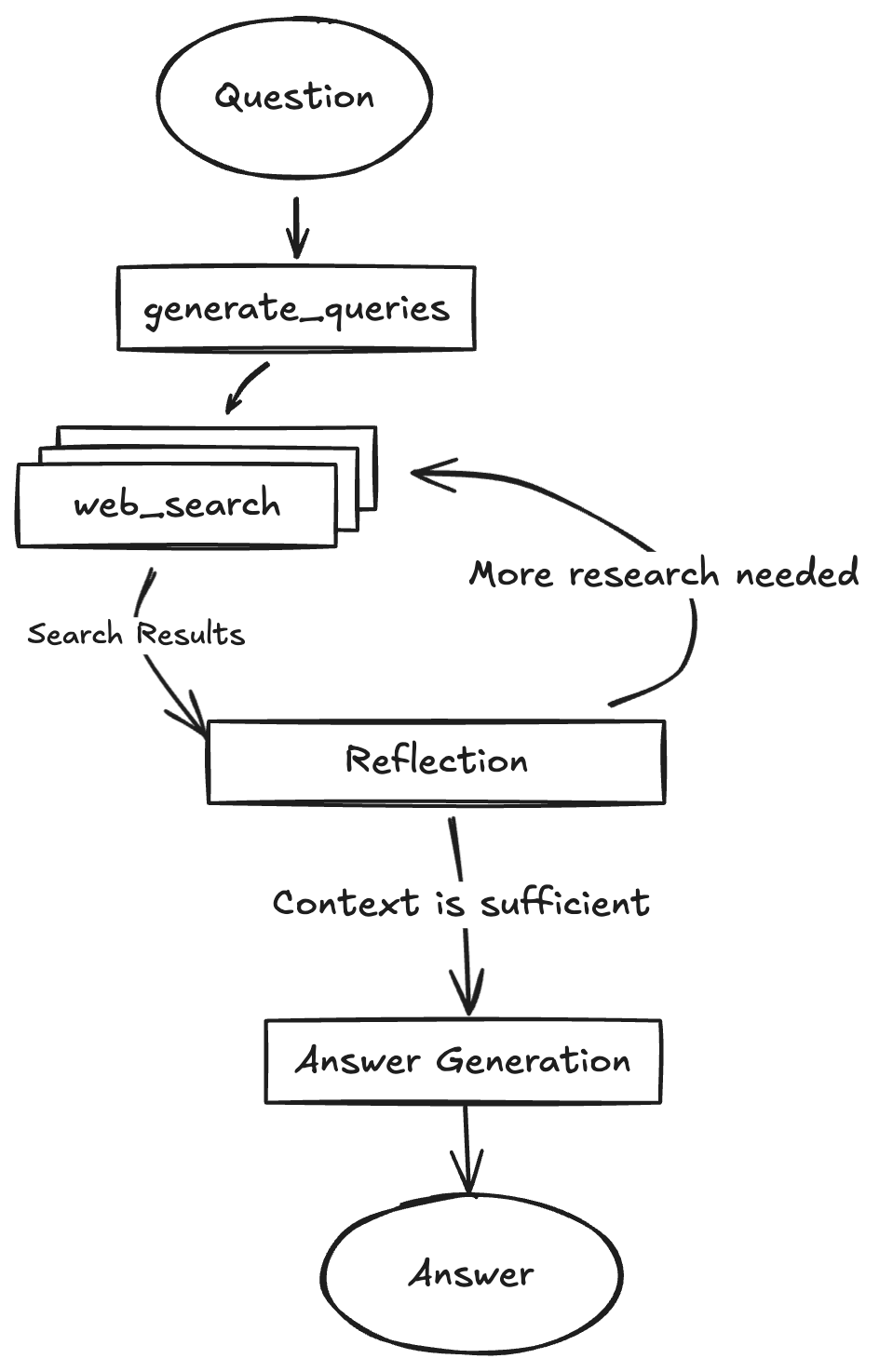

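Main functionality: a web research agent built on the Gemini model service and the ReAct framework. The agent breaks the user's question down into the pieces of information it needs, searches for them using Gemini with the Google Search API, and analyzes the results. It repeats the search until the LLM judges that it can answer the question, then combines the collected information into the final answer.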

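Analysis

The overall framework follows a ReAct-like pattern: it runs web queries iteratively until it judges that there is enough material to generate an answer.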

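Query generation (generate_queries)

Query generation relies mainly on the LLM. By default it uses the gemini-2.0-flash model, whose main characteristics are speed, native multimodality with text and image output, a 1M-token context window with 8k output tokens, and support for tool calling.

The call uses a temperature of 1.0 to keep the generated queries as diverse as possible.

The prompt used: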

Your goal is to generate sophisticated and diverse web search queries. These queries are intended for an advanced automated web research tool capable of analyzing complex results, following links, and synthesizing information.

Instructions:

- Always prefer a single search query, only add another query if the original question requests multiple aspects or elements and one query is not enough.

- Each query should focus on one specific aspect of the original question.

- Don't produce more than {number_queries} queries.

- Queries should be diverse, if the topic is broad, generate more than 1 query.

- Don't generate multiple similar queries, 1 is enough.

- Query should ensure that the most current information is gathered. The current date is {current_date}.

Format:

- Format your response as a JSON object with ALL two of these exact keys:

- "rationale": Brief explanation of why these queries are relevant

- "query": A list of search queries

Example:

Topic: What revenue grew more last year apple stock or the number of people buying an iphone

{{

"rationale": "To answer this comparative growth question accurately, we need specific data points on Apple's stock performance and iPhone sales metrics. These queries target the precise financial information needed: company revenue trends, product-specific unit sales figures, and stock price movement over the same fiscal period for direct comparison.",

"query": ["Apple total revenue growth fiscal year 2024", "iPhone unit sales growth fiscal year 2024", "Apple stock price growth fiscal year 2024"],

}}

Context: {research_topic}

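Main structure of the query generation prompt:

- Task description: what the goal is, what the input is, and what the output will be used for

- Detailed task instructions: preferences that constrain the generated queries (few, independent, diverse, capped in number), plus basic context such as the current date

- Output format: JSON containing a rationale (which nudges the model to reason first) and the decomposed queries

- Example

- Input: the research topic, i.e. the question entered by the user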
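The doubled braces `{{ }}` in the prompt's example suggest that the template is filled in with Python's `str.format`, where `{{` renders as a literal `{`. The sketch below shows that mechanism on an abbreviated copy of the template, together with one way to read the two JSON keys back. The helper names `build_query_prompt` and `parse_queries` are hypothetical, and the sketch assumes the model replies with strictly valid JSON; how the project itself parses the reply is not shown in this article.

```python
import json
from datetime import date
from typing import List, Optional

# Abbreviated version of the prompt quoted above; only the placeholders matter.
QUERY_PROMPT = """- Don't produce more than {number_queries} queries.
- The current date is {current_date}.

Example:
{{
    "rationale": "why these queries are relevant",
    "query": ["first query", "second query"]
}}

Context: {research_topic}"""


def build_query_prompt(
    research_topic: str,
    number_queries: int = 3,
    today: Optional[date] = None,
) -> str:
    """Fill the placeholders; '{{' and '}}' become literal braces."""
    today = today or date.today()
    return QUERY_PROMPT.format(
        number_queries=number_queries,
        current_date=today.isoformat(),
        research_topic=research_topic,
    )


def parse_queries(raw: str, number_queries: int = 3) -> List[str]:
    """Extract the 'query' list and enforce the cap stated in the prompt."""
    data = json.loads(raw)
    queries = data["query"]
    if not isinstance(queries, list):
        raise ValueError("'query' must be a list of strings")
    return [str(q) for q in queries][:number_queries]
```

Enforcing the cap again in code is a defensive choice: the prompt asks for at most `{number_queries}` queries, but nothing guarantees the model complies.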

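Each generated sub-query is then sent to the web_search node for further searching and analysis.

Web search (web_search)

Web search also defaults to the gemini-2.0-flash model, with temperature 0 for maximum stability, and it explicitly enables Gemini's google_search tool.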
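Dispatching one search per sub-query is a fan-out step. As a rough standard-library approximation (not the LangGraph mechanism the project uses), the sketch below runs a search callable over every query with a thread pool. It assumes the searches are independent of each other; `fan_out` and `max_workers` are names introduced here for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fan_out(
    queries: List[str],
    search: Callable[[str], str],
    max_workers: int = 4,  # assumption: a small pool is enough for a few queries
) -> List[str]:
    """Run `search` once per query and return results in query order."""
    if not queries:
        return []
    workers = min(max_workers, len(queries))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map preserves the order of the input iterable.
        return list(pool.map(search, queries))
```

Keeping the results in query order makes it straightforward to pair each summary with the sub-query that produced it.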

Conduct targeted Google Searches to gather the most recent, credible information on "{research_topic}" and synthesize it into a verifiable text artifact.

Instructions:

- Query should ensure that the most current information is gathered. The current date is {current_date}.

- Conduct multiple, diverse searches to gather comprehensive information.

- Consolidate key findings while meticulously tracking the source(s) for each specific piece of information.

- The output should be a well-written summary or report based on your search findings.

- Only include the information found in the search results, don't make up any information.

Research Topic:

{research_topic}

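This prompt likewise has a few key parts:

- Task description: collect material and synthesize it into a verifiable text; again short and explicit

- Detailed task instructions: the collected information should be recent and diverse, and the write-up should be a summary that can be traced back to its sources and contains nothing made up

- Input: the research topic (`{research_topic}`), i.e. the sub-query passed in from the previous step

Unlike query decomposition, this step does not need structured output, so there is no output format section and no example.

The content returned by the model is then post-processed, for example by adding markers to the text.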
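One plausible form of this post-processing is inserting citation markers into the generated text. The sketch below assumes a hypothetical citation structure (a character offset `end_index` plus a list of `label`/`url` pairs); the real shape of the grounding metadata returned by the Gemini API is not described in this article, so treat the field names as placeholders.

```python
from typing import Dict, List


def insert_citation_markers(text: str, citations: List[Dict]) -> str:
    """Append markdown links right after each cited segment.

    Each citation is a hypothetical dict of the form:
        {"end_index": int, "links": [{"label": str, "url": str}, ...]}
    """
    # Work from the end of the text backwards so that earlier
    # offsets stay valid after each insertion.
    for citation in sorted(citations, key=lambda c: c["end_index"], reverse=True):
        marker = "".join(
            f" [{link['label']}]({link['url']})" for link in citation["links"]
        )
        end = citation["end_index"]
        text = text[:end] + marker + text[end:]
    return text
```

Processing the offsets in descending order is the key design choice: inserting at a later position never shifts the positions that still have to be handled.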

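Reflection (reflection)

This step defaults to the gemini-2.5-flash model, the successor to gemini-2.0-flash. Version 2.5 introduces a hybrid reasoning architecture in which thinking can be switched on or off and the reasoning depth can be set (0 to 24576 tokens), so it can be tuned dynamically to meet speed and quality requirements; with thinking turned off it is faster than 2.0. Reflection uses temperature 1.0.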

You are an expert research assistant analyzing summaries about "{research_topic}".

Instructions:

- Identify knowledge gaps or areas that need deeper exploration and generate a follow-up query. (1 or multiple).

- If provided summaries are sufficient to answer the user's question, don't generate a follow-up query.

- If there is a knowledge gap, generate a follow-up query that would help expand your understanding.

- Focus on technical details, implementation specifics, or emerging trends that weren't fully covered.

Requirements:

- Ensure the follow-up query is self-contained and includes necessary context for web search.

Output Format:

- Format your response as a JSON object with these exact keys:

- "is_sufficient": true or false

- "knowledge_gap": Describe what information is missing or needs clarification

- "follow_up_queries": Write a specific question to address this gap

Example:

{{

"is_sufficient": true, // or false

"knowledge_gap": "The summary lacks information about performance metrics and benchmarks", // "" if is_sufficient is true

"follow_up_queries": ["What are typical performance benchmarks and metrics used to evaluate [specific technology]?"] // [] if is_sufficient is true

}}

Reflect carefully on the Summaries to identify knowledge gaps and produce a follow-up query. Then, produce your output following this JSON format:

Summaries:

{summaries}

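This prompt is structured slightly differently from the ones used with 2.0; it is unclear whether this is the best form arrived at through tuning:

- Role definition: "You are an expert…". It is unclear whether this hypnosis-style phrasing works better with 2.5

- Detailed task instructions: if something is missing, identify the knowledge gap and generate new queries, focusing on technical implementation details and emerging trends

- Requirements: this is probably a constraint added during tuning to safeguard the quality of the follow-up queries: "Ensure the follow-up query is self-contained and includes necessary context for web search"

- Output format: a standard JSON output is defined so that the next step can easily decide whether to keep searching or to finish and generate the answer

- Example: the example contains only the response structure, mainly so the model understands the structure and the allowed values

- Task description: here the task description comes last. Placing it close to the output format is, presumably, meant to help the model focus its attention

- Input: the summaries produced by web search, including citation information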
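The decision that this JSON output enables can be sketched as a small routing function. The sketch assumes the reply is plain JSON (the `//` comments in the prompt's example would not parse), and the `loops_done`/`max_loops` cap is an assumption added here so the loop cannot run forever. The returned node names follow the section names used in this article.

```python
import json
from typing import List, Tuple


def decide_next_step(
    raw_reflection: str,
    loops_done: int,
    max_loops: int = 2,  # assumption: an upper bound on research rounds
) -> Tuple[str, List[str]]:
    """Return the next node name and the follow-up queries to run."""
    data = json.loads(raw_reflection)
    follow_ups = list(data.get("follow_up_queries") or [])
    # Stop when the model is satisfied, has nothing to ask, or the cap is hit.
    if data.get("is_sufficient") or not follow_ups or loops_done >= max_loops:
        return "answer_generation", []
    return "web_search", follow_ups
```

For example, a reply with `"is_sufficient": false` and one follow-up query routes back to `web_search` on the first round, while the same reply routes to `answer_generation` once `loops_done` reaches `max_loops`.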

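Answer generation (answer_generation)

This step aggregates the findings for each sub-question and summarizes them. By default it uses the full-strength gemini-2.5-pro model, with temperature 0 for rigor.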

Generate a high-quality answer to the user's question based on the provided summaries.

Instructions:

- The current date is {current_date}.

- You are the final step of a multi-step research process, don't mention that you are the final step.

- You have access to all the information gathered from the previous steps.

- You have access to the user's question.

- Generate a high-quality answer to the user's question based on the provided summaries and the user's question.

- Include the sources you used from the Summaries in the answer correctly, use markdown format (e.g. [apnews](https://vertexaisearch.cloud.google.com/id/1-0)). THIS IS A MUST.

User Context:

- {research_topic}

Summaries:

{summaries}

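The prompt contains the task description, the task instructions, the context (the conversation history), and the summaries, i.e. the final research results for the individual sub-questions.

Among the task instructions, the requirement to cite sources in markdown format is given particular emphasis.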
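As a small illustration of how the summaries might be assembled into this prompt, and how the markdown citation requirement could be checked afterwards, consider the sketch below. The template is abbreviated, and the separator string, the helper names, and the regular expression are all assumptions made for this example rather than details taken from the project.

```python
import re
from typing import List

# Abbreviated version of the answer prompt quoted above.
ANSWER_PROMPT = """Generate a high-quality answer to the user's question based on the provided summaries.

User Context:
- {research_topic}

Summaries:
{summaries}"""

# Matches a markdown link such as [apnews](https://example.com/id/1-0).
_MD_LINK = re.compile(r"\[[^\]]+\]\(https?://[^)\s]+\)")


def build_answer_prompt(research_topic: str, summaries: List[str]) -> str:
    """Join the per-query summaries and fill them into the template."""
    joined = "\n\n---\n\n".join(summaries)  # assumption: a visible separator
    return ANSWER_PROMPT.format(research_topic=research_topic, summaries=joined)


def has_markdown_citation(answer: str) -> bool:
    """Check that the answer contains at least one markdown link."""
    return _MD_LINK.search(answer) is not None
```

A check like `has_markdown_citation` could be used to flag answers that ignore the "THIS IS A MUST" instruction, although it only verifies the link syntax, not that the link points to a real source.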

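Summary

- Agents need to be fast: the speed of the flash models matters a great deal in an agent. An agent typically makes several LLM calls per request, and if every call used the full-strength model it would be hard to deliver a good user experience

- Model selection:

  - Within a single agent workflow, choose different models for different scenarios;

  - When several models are used, the model's own multi-turn conversation feature may not be usable. Instead, the conversation history can be added to the prompt so that the LLM knows the context; the later the step, the longer the context it needs;

  - Pick a model that is just good enough, and be cautious about choosing the full-strength large model

- Prompt structure: the prompts used by this Gemini agent consist mainly of the following parts

  - Role definition: role definitions have always been somewhat controversial; it is worth experimenting depending on the model and the scenario

  - Task description: the basic task definition, stating as simply and clearly as possible what the task needs to do

  - Task instructions: preferences for how the task should be carried out, written as a markdown list; they can include basic context such as the current date

  - Task requirements: requirements that need emphasis can be placed in their own section

  - Output format: when the output needs a structured format, a schema can be defined separately and combined with an example

  - Task example: where examples are available, add them; few-shot prompting improves task accuracy

- Temperature: in this project the temperature is generally either 0 or 1. Use 0 where stability matters and 1 where diversity matters. Other values are rarely used, perhaps because their effect is hard to control

- Agent implementation: LangGraph makes it quick to implement all kinds of agent workflows. Building an agent is not complicated; what matters is choosing a good scenario that yields a good ROI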
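The per-step model and temperature choices described in this article can be collected into one small configuration table. The sketch below only restates the values given in the sections above; the `NodeLLMConfig` class and `config_for` helper are hypothetical names, not part of the project.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class NodeLLMConfig:
    """Model and sampling temperature for one step of the agent."""
    model: str
    temperature: float


# Values as described in this article; step names follow the section titles.
NODE_CONFIGS: Dict[str, NodeLLMConfig] = {
    "generate_queries": NodeLLMConfig("gemini-2.0-flash", 1.0),   # diversity
    "web_search": NodeLLMConfig("gemini-2.0-flash", 0.0),         # stability
    "reflection": NodeLLMConfig("gemini-2.5-flash", 1.0),
    "answer_generation": NodeLLMConfig("gemini-2.5-pro", 0.0),    # rigor
}


def config_for(node: str) -> NodeLLMConfig:
    """Look up the configuration for a step, failing loudly on typos."""
    try:
        return NODE_CONFIGS[node]
    except KeyError:
        raise ValueError(f"unknown node: {node!r}") from None
```

Keeping these choices in one place makes the trade-off visible at a glance: only the final step uses the largest model, and only the steps that benefit from variety use a non-zero temperature.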